The goal of this project is to use a human-centered approach grounded in current social science theory and frameworks to add context to and further develop existing placement stability and risk assessment models that are used to aid overworked social workers in making, explaining, and standardizing their decisions.

| Slide

|

Transcription

|

|

Hello, my name is Charlie Repaci, and I am a Senior at Simmons University. My project is titled Developing Ethical Algorithms for Placement Stability in the Foster Care System, which I worked on under the guidance of doctoral student Devansh Saxena and Dr. Shion Guha.

|

|

In this presentation, I’ll go through the work I have done over the last 10 weeks, starting with an introduction and background, then moving on to objectives, methodology, results, discussion, and plans for future work.

|

|

Social work is a high-stress and mostly thankless job. Social workers are understaffed, underfunded, and overworked. It’s due to this high stress with little reward that there is such a high job turnover rate.

This high turnover rate also influences the cases that are being investigated, and the care that each receives can be disjointed. A social worker newly assigned to an active case then has to do extra work of familiarizing themself with it, which can also make the process take longer. Children can be moved from a foster home not because there is a difficulty in the home, but because there is no longer a social worker in the area to check in on them. For greater placement stability and better outcomes for children in the foster care system, social workers need more support.

It is for this reason that algorithms were first introduced into the field: to aid social workers in making, explaining, and standardizing their decisions.

|

|

Though the technical definition is any process with a well-defined sequence of steps, what algorithms actually are in practice is widely debated and varies depending on the field and context. Algorithms in social work are often not of a strictly computational nature; they start out as psychometric or communimetric assessment matrices that have evolved to become guidelines for data collection, which are then used to make decisions about future cases. They are becoming increasingly entrenched in various social programs, and while they were originally introduced for the purpose of enhancing and rehabilitating existing systems, they are often used without due consideration of the ethical concerns their application raises or the framework on which social fields usually rely.

Family and community stakeholders who comprise the bulk of Child Protective Services (CPS) inquiries are not placated by the inclusion of algorithms, and mistrust them just as much as they mistrust the rest of the system, in part because of the perception that they are unknowable, and in part because they have not shown that they decrease bias in the system as they were meant to. Additionally, social workers are often suspicious of the new technology and tools that are intended to aid them. This is for many reasons, including that these algorithms were developed without users’ input, and so don’t adequately address their needs and often contradict their theoretical assessments.

In addition, algorithms are often misappropriated for purposes outside their original scope, and do not adapt well to the change. For example, the Child and Adolescent Needs and Strengths (CANS) model, originally created to assess the needs of a child, is being used to calculate compensation for caretakers, so that when a child is doing better than at their previous assessment due to the caretakers’ efforts, compensation is lowered.

|

|

The perception of algorithms as unbiased paragons of decision making with god-like insight has contributed to their use without due process and ethical consideration. Algorithms are not implemented in a vacuum. Their input can be biased in a multitude of ways, including the way the data was collected, the exclusion, intentional or not, of other data, and the designer’s bias. These issues can in turn reproduce, and in some cases even worsen, the very biases the algorithms were meant to eliminate. This remains, however, unrecognized by many policy and decision makers.

Systematic, regular analysis of algorithms in social work (and many other fields, for that matter) to ensure performance after their implementation is nonexistent. There is no standardized framework for testing for ethics in algorithms during the design process or after implementation. A formalized process for third-party inquiries would be ideal, but this measure is not in place either.

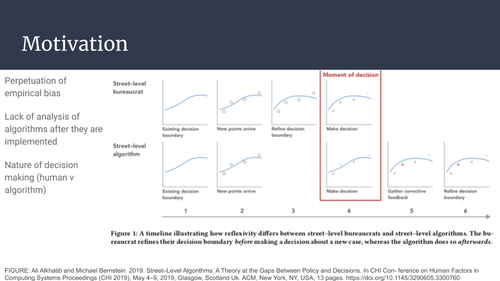

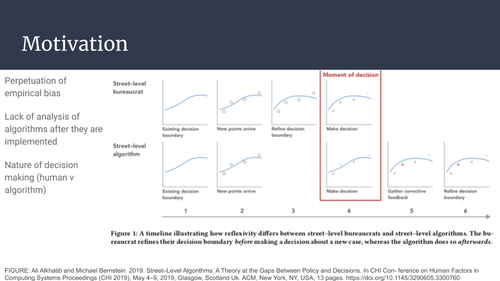

Moreover, algorithms tend to struggle in high-consequence fields like child welfare, and this is inherent in the way they make decisions. While a social worker is capable of refining their decision criteria before making decisions on a complex case, an algorithm can only update its decision boundary in response to an incorrect classification, which has deep ethical implications. You can see in the figure above the difference in how a human would make a decision and how an algorithm makes a decision. Because of this, the design of an algorithm should include the ability to identify edge cases that require greater human attention, and at a policy level, there should be room for appeals and recourse.
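One classic learner that behaves this way is the perceptron, which changes its decision boundary only when it misclassifies an example. The talk does not name a specific algorithm, so the sketch below is an illustration under that assumption, and the function name `perceptron_step` and the toy inputs are hypothetical.

```python
def perceptron_step(weights, bias, features, label, learning_rate=1.0):
    """Apply one perceptron update for a single example.

    `label` is +1 or -1. Returns (weights, bias, changed), where `changed`
    is True only if the example was misclassified.
    """
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    if label * activation > 0:
        # Correct classification: the decision boundary is left untouched.
        return weights, bias, False
    # Misclassification: shift the boundary toward the correct side.
    new_weights = [w + learning_rate * label * x for w, x in zip(weights, features)]
    new_bias = bias + learning_rate * label
    return new_weights, new_bias, True


# Toy example (illustrative values only).
w, b = [1.0, 0.0], 0.0
unchanged = perceptron_step(w, b, [2.0, 1.0], +1)  # classified correctly
updated = perceptron_step(w, b, [2.0, 1.0], -1)    # classified incorrectly
```

In this sketch nothing is learned from a case the model gets right, and the correction for a wrong case arrives only after the error has been made, which is the ethical concern raised above.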

Overall, it is clear there is a need for assessment, development, and accountability of algorithms in child welfare systems.

|

|

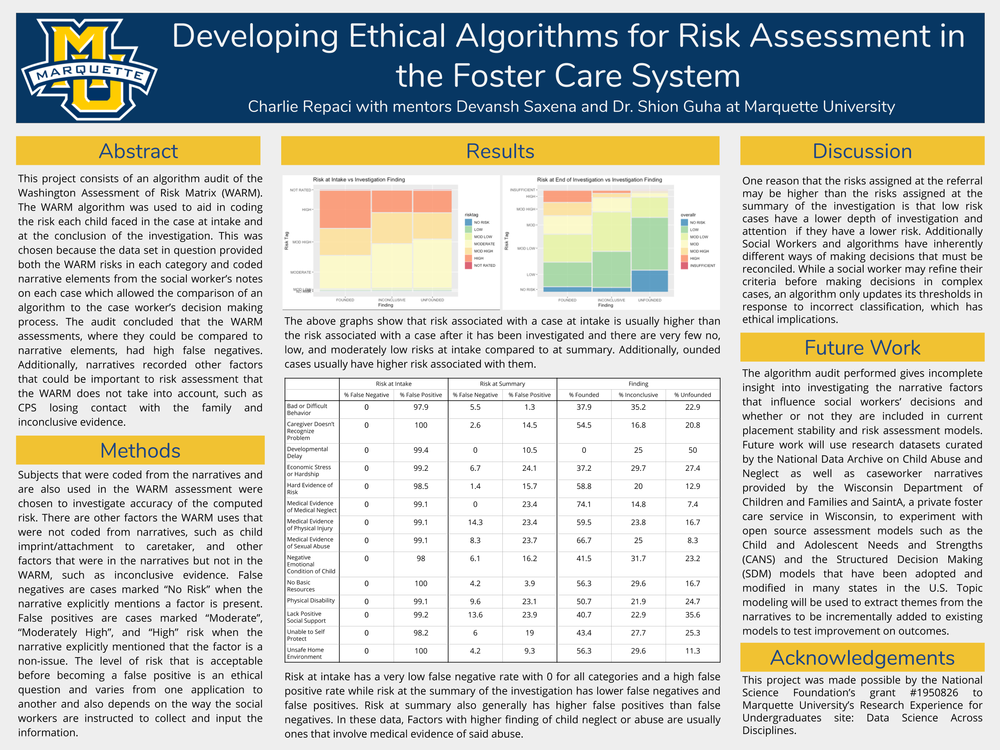

This project was an audit of the Washington Assessment of Risk Matrix (WARM), which was used to help code the risk each child faced at intake and at the conclusion of the investigation, and to plan future accommodations. The purpose of the audit was to compare the results of the algorithm to the coded narrative elements in the case notes that a social worker writes, and to contrast not only the risk differences and outcomes but also the subjects of interest and what is being measured.

|

|

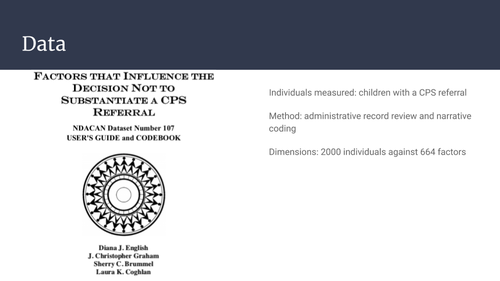

Although we started the project with four related datasets, we focused on just one due to the time constraints of the project. The Phase I dataset came from another study of case notes which aimed to determine factors associated with the unsubstantiation of CPS referrals. In this dataset, the individuals recorded were children with a CPS referral. Data was gathered through the review of administrative records. 3,000 CPS referrals were randomly selected from one year of records (a total of 7,701 referrals). Cases excluded from the review and narrative coding included those with “limited access, information only referrals, risk tag pending, licensing, third party perpetrators, a sibling as the perpetrator, duplicate referrals, and referrals where there was no identifiable victim”. The study then coded the case notes of 2,000 referrals, the final number of records. My mentors provided this dataset from the National Data Archive on Child Abuse and Neglect (NDACAN).

|

|

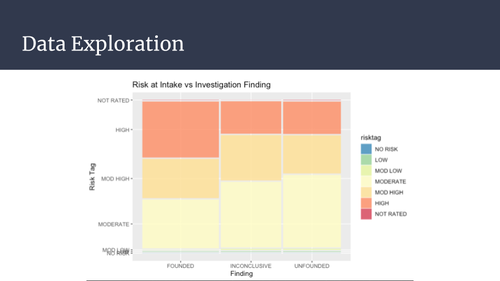

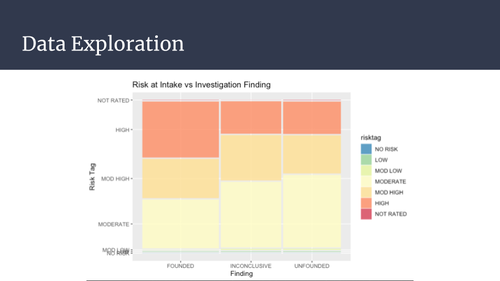

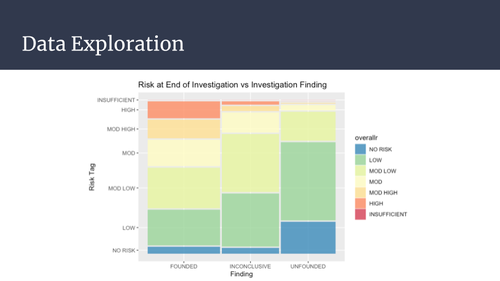

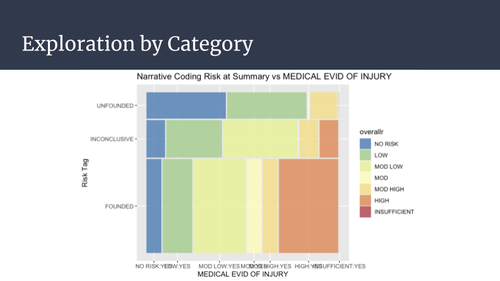

The above graph shows risk associated with a case based on the referral compared to the outcome of the case. Risk Tag is the output total risk of the WARM, and the finding is the conclusion of whether or not the social worker determined that past abuse had occurred and the child was at risk of it happening again. Founded cases go on to other social services to address their specific needs and lessen risk to the child.

Most referrals are clearly at the moderate through high levels of risk. Additionally, founded cases are in general associated with higher risk. One reason that the risks assigned at referral may be so high is that low-risk cases receive a lower depth of investigation and attention. If a referrer wants the case to be held to a higher standard of investigation, they must ensure that the reported risk is greater.
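The counts behind a chart like this one can be tabulated by counting each combination of Risk Tag and finding. The snippet below is a minimal sketch of that tabulation; the records are made-up placeholders rather than values from the dataset, and the name `cross_tabulate` is hypothetical.

```python
from collections import Counter

# Hypothetical (risk_tag, finding) pairs; real values would come from the dataset.
cases = [
    ("High", "Founded"),
    ("Moderate", "Founded"),
    ("Moderate", "Unfounded"),
    ("Low", "Unfounded"),
    ("High", "Founded"),
]


def cross_tabulate(records):
    """Count how many cases fall into each (risk tag, finding) combination."""
    return Counter(records)


counts = cross_tabulate(cases)
for (risk, finding), n in sorted(counts.items()):
    print(f"{risk:>10} | {finding:<10} | {n}")
```

Combinations that never occur simply count as zero, so sparse cells such as low-risk founded cases need no special handling.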

|

|

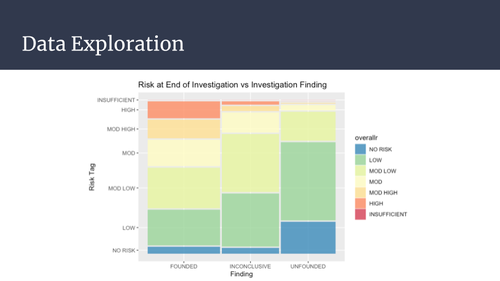

This graph shows the risk assessed at the summary of the investigation compared to the finding of the referral. In comparison to the previous graph, there are far fewer moderate through high risk cases. Again, founded cases are in general associated with higher risks. It is interesting to note that there are cases with ratings of no risk that are founded and cases with moderate through high risk that are unfounded.

|

|

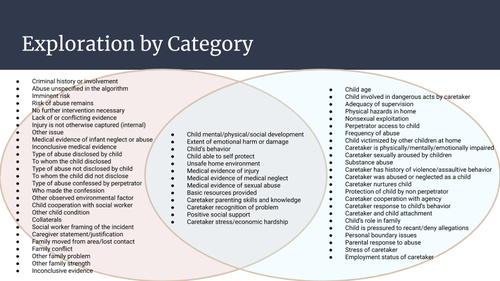

You don’t have to read all this, but I wanted to make sure it was all in here. Categories that are mentioned specifically in the narrative in addition to being scored in the WARM either require further elaboration of the score (for example, the social worker may feel that the WARM category is too restrictive) or are the pieces that social workers feel are essential to the case, more so than the other axes that the WARM measures. The narrative categories that the WARM does not cover but that social workers feel are important to mention in the case notes were numerous, and mostly focused on inconclusive evidence, abuse types not accounted for by the model, specifics about how the referral was made, and other family conflicts that could endanger the child.

|

|

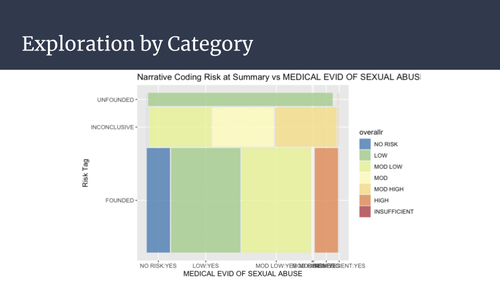

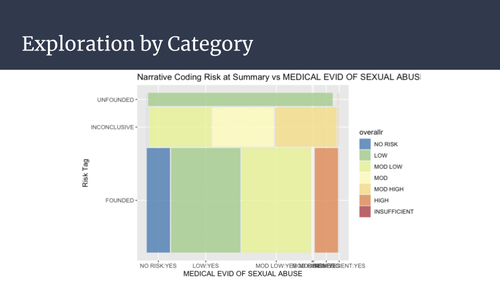

I’m not going to show you all of them, but here are a few of the graphs of the variables that were both in narrative coding and the WARM. Most cases with medical evidence of sexual abuse are founded, and those that are founded in general have higher levels of risk.

|

|

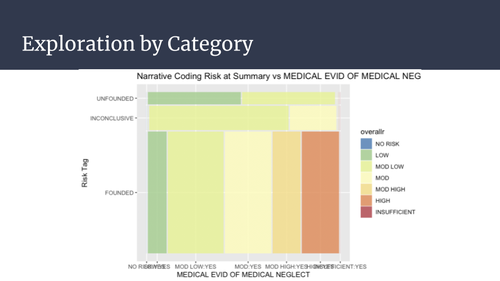

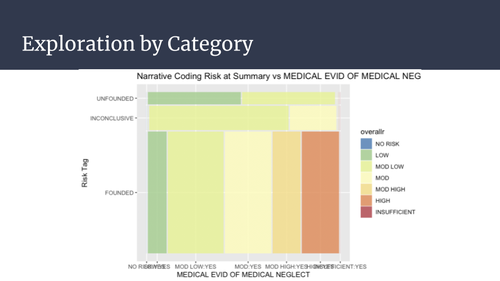

This graph of medical negligence against risk shows a similar pattern, with more founded cases than not, and higher risk associated with founded cases.

|

|

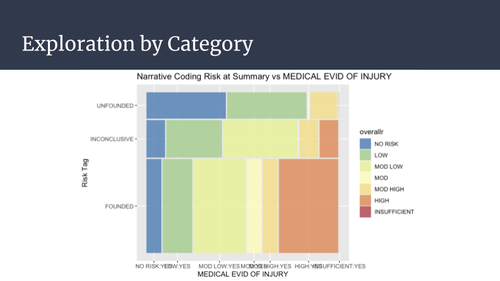

This graph of medical evidence of injury shows that same pattern of finding and risk. You can also note, however, that many cases with explicit injury mentioned are marked no risk even if they are founded. This is most likely a failing of the algorithm, but it could also be an example of a caseworker determining that while abuse happened, there was no risk of it happening again.

|

|

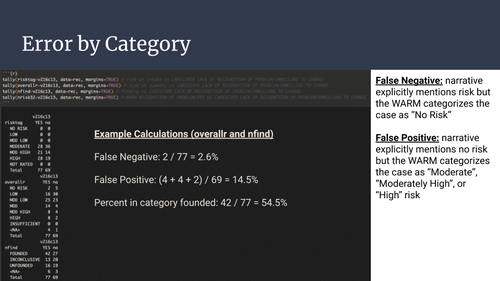

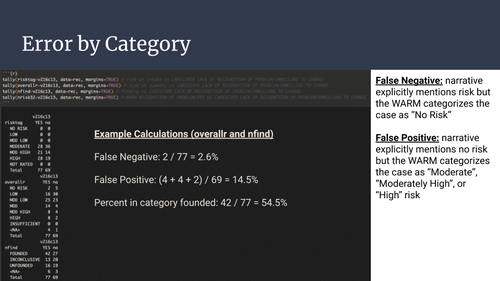

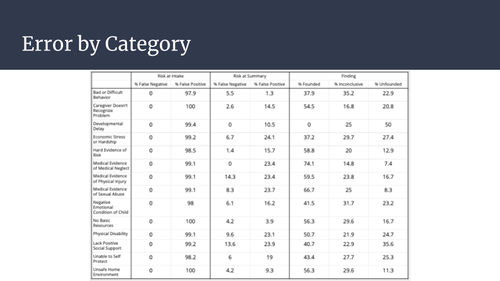

Subjects that were coded from the narratives and are also used in the WARM assessment were chosen to investigate accuracy of the computed risk. The level of risk that is acceptable before becoming a false positive is an ethical question and varies from one application to another and also depends on the way the social workers are instructed to collect and input the information. Cases with a low or moderately low assigned risk also have a low standard of investigation; that is, CPS reviews prior involvement and collateral contacts to determine if further investigation should occur. Risk levels of “Moderate” or higher demand a high standard of investigation, including a review of prior CPS interaction, collateral contacts, interviews, and other assessments. Based on this, the false positive threshold was chosen as anything categorized as “Moderate” or higher in risk.
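The threshold rule described above can be sketched as a comparison on an ordered list of risk labels. The ordering below is an assumption built only from the levels named in this talk; the actual WARM scale may contain additional levels, and the names `RISK_LEVELS` and `is_flagged` are hypothetical.

```python
# Ordered from lowest to highest risk. This list is an assumption based on
# the levels named in the talk, not the official WARM scale.
RISK_LEVELS = ["No risk", "Low", "Moderately low", "Moderate", "High"]
THRESHOLD = "Moderate"


def is_flagged(risk_label):
    """Return True if the label is at or above the "Moderate" threshold."""
    return RISK_LEVELS.index(risk_label) >= RISK_LEVELS.index(THRESHOLD)


flags = {label: is_flagged(label) for label in RISK_LEVELS}
```

With this rule, any case rated “Moderate” or higher counts as a positive prediction of risk, and anything lower counts as a negative.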

In this section, we’re more interested in false negatives than false positives. A false negative disregards an explicit risk, whereas a false positive may be positive only because the case is high in another category of risk that brings the overall risk score up.

|

|

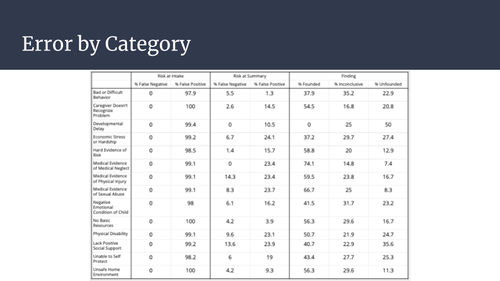

These are the categories that the narrative notes and the WARM had in common. Using the narrative coding as the “true” presence of risk in the case, we can test the WARM’s accuracy by variable. Risk at intake has a false negative rate of 0 for all categories and a high false positive rate, while risk at the summary of the investigation has more accurate rates. This is expected for risk at intake: if you remember the risk distribution at intake, nearly all cases are rated “Moderate” or higher, and in these categories specifically, there were no cases rated lower than “Moderate”. The risk at summary has more typical false negative and false positive rates.
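As a rough sketch of how these rates can be computed for one variable, each case can be treated as a pair: whether the narrative coding mentions the factor (taken as the truth) and whether the WARM risk is at or above the threshold (taken as the prediction). The function name `error_rates` and the example pairs are hypothetical and do not come from the dataset.

```python
def error_rates(pairs):
    """Compute (false_negative_rate, false_positive_rate).

    `pairs` is an iterable of (narrative_present, warm_flagged) booleans.
    A rate is None when its denominator is zero.
    """
    tp = fp = tn = fn = 0
    for present, flagged in pairs:
        if present and flagged:
            tp += 1
        elif present and not flagged:
            fn += 1
        elif not present and flagged:
            fp += 1
        else:
            tn += 1
    fnr = fn / (fn + tp) if (fn + tp) else None
    fpr = fp / (fp + tn) if (fp + tn) else None
    return fnr, fpr


# Toy intake-like data: every case is flagged, whether or not the factor is present.
intake_like = [(True, True), (True, True), (False, True), (False, True)]
rates = error_rates(intake_like)
```

When every case is flagged, as in the toy data, the false negative rate is 0 and the false positive rate is 1, which mirrors the pattern described for risk at intake.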

In terms of findings, those which had higher percentages involved hard or medical evidence of some kind. Factors that were not directly involved in abuse, such as developmental delay, difficult behavior in the child, and economic stress or hardship, had lower percentages of findings and were associated with lower risks than some of the other variables.

|

|

The main limitation of this work is that the WARM was replaced by the Structured Decision Making model and thus these findings cannot be extended directly to modern cases. The SDM uses more machine learning techniques than the WARM and addresses some of the shortcomings, but still has many of the same issues as its predecessor including racial disproportionality and bias in other areas. Notably, however, it also includes a discretionary override for the caseworker to increase the risk with specified justification if they feel the algorithm has judged risk of future harm to be too low.

|

|

The algorithm audit performed gives incomplete insight into the narrative factors that influence social workers’ decisions and whether or not they are included in current placement stability and risk assessment models, and future work would continue this research. Caseworker notes from the Wisconsin Department of Children and Families and from SaintA, a private foster care service, would be analyzed with topic modeling to extract latent themes. Open source placement stability and risk assessment models such as the CANS and SDM models, which have been adopted and modified in many states, would also be used. This would allow experimentation with adding narrative themes to existing placement models to test for improvement in outcomes.

|

|

|

|

I’d like to thank my mentors for all their help on this project, the NSF for funding it, and Doctors Brylow and Madiraju for hosting and putting this all together. I had a lot of fun on the project and really enjoyed my summer. I’ll take any questions at this time.

|